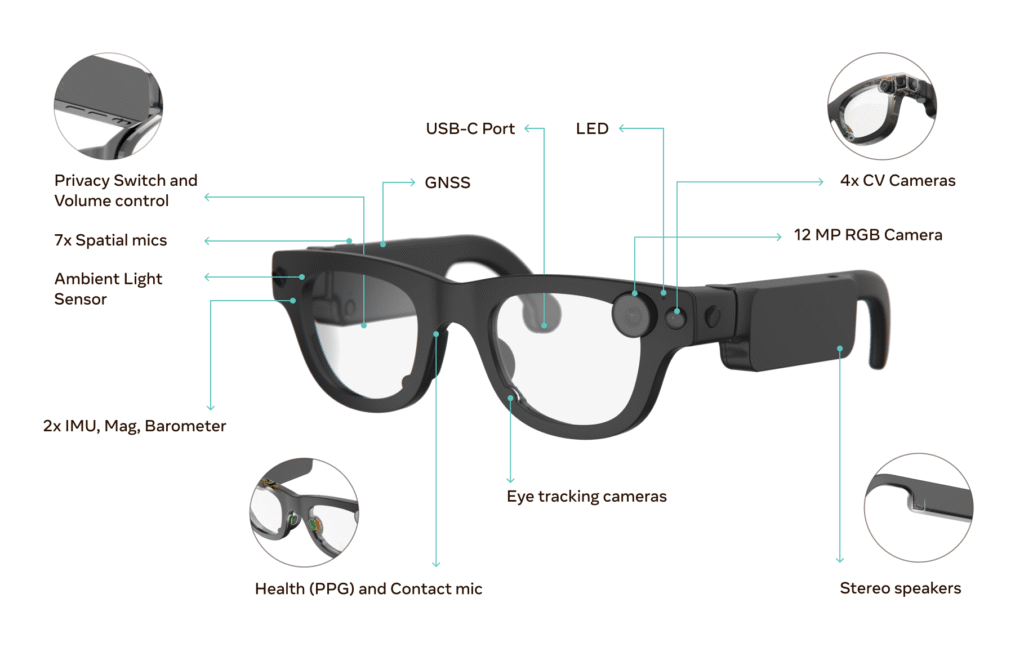

Meta has built a new set of smart glasses called Aria Gen 2. These glasses are meant for research. They help experts learn more about how augmented reality and artificial intelligence can work together. Aria Gen 2 is not for sale. Instead, it is a test platform for scientists and engineers. The goal is to use what is learned here to make future products.

Advanced Eye Tracking That Sees Both Eyes Separately

Aria Gen 2 includes a new eye-tracking system. This system watches each eye on its own. It can tell where each pupil is looking. It also notices when a user blinks. This level of detail helps researchers understand how people pay attention. It can show exactly what someone is looking at and when. That knowledge might one day shape how people control computers with their gaze.

The eye tracker measures the center of each pupil. It does this by shining a small light toward the eye. The light reflects off the surface of the eye. Then a tiny camera inside the frame catches that reflection. Next, the software in the glasses calculates where the pupil is pointed. All of this happens many times per second. It creates a smooth, continuous record of where someone’s eyes move.

When a person blinks, the system knows that no eye signal is present. It uses that information to reset its measurements. Tracking each eye separately helps detect subtle shifts when a person looks from left to right. It can also tell when one eye looks slightly higher or lower than the other. Data on this kind of eye movement can help experts study how the brain controls sight. It could also improve safety for air traffic controllers, drivers, and heavy machinery operators. Knowing if someone is getting tired or losing focus could prevent mistakes.
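To make the idea concrete, here is a minimal Python sketch of how per-eye samples with blink gaps might be compared. It is an illustration only, not Meta's software: the `Gaze` type, the degree units, and the `vertical_offsets` function are assumptions made for this example.

```python
from typing import List, Optional, Tuple

# Hypothetical sample format: (horizontal_deg, vertical_deg) for one eye,
# or None when that eye is closed and no signal is available.
Gaze = Optional[Tuple[float, float]]


def vertical_offsets(left: List[Gaze], right: List[Gaze]) -> List[Optional[float]]:
    """Return left-minus-right vertical gaze per sample, or None during a blink."""
    offsets: List[Optional[float]] = []
    for l, r in zip(left, right):
        if l is None or r is None:
            # A blink in either eye leaves a gap instead of a made-up value.
            offsets.append(None)
        else:
            offsets.append(l[1] - r[1])
    return offsets


left_eye = [(0.0, 1.0), None, (2.0, 1.5)]
right_eye = [(0.0, 0.5), None, (2.0, 1.5)]
print(vertical_offsets(left_eye, right_eye))  # [0.5, None, 0.0]
```

Keeping blinks as explicit gaps, rather than filling them in, lets later analysis decide how to treat missing data.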

Four Cameras for 3D Hand and Object Tracking

Aria Gen 2 adds four small computer vision cameras on the frame. These cameras work together to build a three-dimensional picture of hands and objects in front of the user. Earlier models of research glasses had only one or two cameras. These new glasses let researchers see exactly how a hand moves through space.

The cameras rely on stereo vision. Each camera captures the same scene from a slightly different angle. By comparing those views, the software estimates the depth of every pixel. In short, it works out how far each part of a hand or object is from the glasses. That allows each finger joint to be mapped in three dimensions.
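The core of stereo depth can be shown with the standard relation for a rectified camera pair: depth equals focal length times the distance between the cameras, divided by how far a point shifts between the two images (the disparity). The Python sketch below applies that textbook formula. The focal length, baseline, and disparity values are hypothetical and do not describe Aria Gen 2's actual cameras.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth in meters for a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        # Zero disparity would mean a point infinitely far away.
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


# Hypothetical values: 400 px focal length, cameras 12.5 cm apart,
# and a fingertip that shifts 100 px between the two views.
depth_m = depth_from_disparity(focal_px=400.0, baseline_m=0.125, disparity_px=100.0)
print(depth_m)  # 0.5
```

Nearby points shift more between views than distant ones, which is why a larger disparity gives a smaller depth.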

Researchers can use this hand-tracking data in many ways. For example, a robot hand could learn to copy the wearer’s finger movements. A surgeon might practice a delicate procedure while the glasses record eye and hand motions. A person with limited mobility might use simple hand gestures to control a wheelchair or communicate without speech.

Tracking objects works the same way. A camera system can track a tool, a pen, a cup, or any item in view. That data can help robots pick up the exact item a person reaches for. Or it can help virtual reality systems render an exact copy of a real object in a 3D scene.

Health Sensing Under the Nosepad

These new glasses also include a photoplethysmography sensor, or PPG, in the nosepad. That sensor measures tiny changes in blood volume under the skin. When the heart beats, more blood flows into the blood vessels near the nose. Between beats, there is slightly less blood under the skin. The sensor shines a light into the skin and measures how much light bounces back. Changes in the reflected light track the pulse.
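As a rough illustration of how a pulse can be read from such a signal, the Python sketch below counts peaks in a synthetic waveform and converts their spacing into beats per minute. It assumes a clean, noise-free signal and a hypothetical 50 Hz sample rate. Real PPG processing needs filtering and motion compensation, and nothing here reflects Meta's actual algorithm.

```python
import math


def estimate_bpm(samples, sample_rate_hz):
    """Estimate heart rate from the average spacing between pulse peaks."""
    mean = sum(samples) / len(samples)
    # A peak is a sample above the mean that is higher than its left
    # neighbor and at least as high as its right neighbor.
    peaks = [
        i for i in range(1, len(samples) - 1)
        if samples[i] > mean and samples[i - 1] < samples[i] >= samples[i + 1]
    ]
    if len(peaks) < 2:
        return None  # not enough beats to measure an interval
    mean_interval_s = (peaks[-1] - peaks[0]) / (len(peaks) - 1) / sample_rate_hz
    return 60.0 / mean_interval_s


# Synthetic stand-in for reflected-light readings: a 1.2 Hz wave
# (72 beats per minute) sampled at 50 Hz for 10 seconds.
sample_rate_hz = 50
signal = [math.sin(2 * math.pi * 1.2 * i / sample_rate_hz) for i in range(500)]
print(estimate_bpm(signal, sample_rate_hz))  # close to 72
```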

With this capability, Aria Gen 2 can estimate the wearer’s heart rate in real time. Researchers can pair that data with eye-tracking and motion data. For example, if a person encounters a stressful scene in a simulation, the system might detect a rapid heartbeat. At the same time, the eye tracker might show that the eyes are fixed on a specific object. Combining those signals can tell scientists how the brain and heart react to visual events. Later, this could help create safer training systems for firefighters, pilots, and first responders. It also might give new insights into stress, attention, and mental workload.

Improved Audio in Busy Environments

Aria Gen 2 also has a small contact microphone built into its frame. That microphone is designed to pick up the wearer’s own voice. It sits close to the wearer’s cheek or jaw. As a result, it picks up speech even in loud places. This means that if a user is in a busy shopping area or on a crowded factory floor, voice recording remains clear.

In many research scenarios, clear speech is essential. For example, while testing a voice-controlled factory robot, the operator must issue commands without repeating them. The contact microphone means the robot can understand the command even with roaring machinery nearby. Later, researchers hope to use this audio data along with eye-tracking and hand motion. Together, these data streams could teach AI systems how to better predict a user’s intent.

Ambient Light Sensor for Indoor and Outdoor Modes

A new ambient light sensor sits near the right hinge of the glasses. It measures how bright the surrounding environment is. With that signal, the glasses can tell if the wearer is indoors or outside on a sunny day. Many camera and display systems use light sensors to adjust brightness. Here, the reading can also help software decide how to filter sensor data. For example, when the wearer steps outdoors, the light changes in both color and intensity. The eye-tracking system may then need to adjust its infrared illumination, and the motion-tracking cameras may need to change their exposure settings to cope with glare.
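One simple way software could turn brightness readings into an indoor or outdoor mode is a pair of thresholds with a gap between them, so the mode does not flicker near a window or doorway. The Python sketch below shows that idea. The lux thresholds and the `classify_light` function are assumptions chosen for illustration, not values published by Meta.

```python
def classify_light(lux_readings, outdoor_above=5000.0, indoor_below=1000.0, start="indoor"):
    """Label each reading 'indoor' or 'outdoor' using two thresholds (hysteresis)."""
    mode = start
    modes = []
    for lux in lux_readings:
        if mode == "indoor" and lux > outdoor_above:
            mode = "outdoor"
        elif mode == "outdoor" and lux < indoor_below:
            mode = "indoor"
        # Readings between the two thresholds keep the current mode.
        modes.append(mode)
    return modes


readings = [300, 450, 3000, 20000, 4000, 800]
print(classify_light(readings))
# ['indoor', 'indoor', 'indoor', 'outdoor', 'outdoor', 'indoor']
```

Downstream code could then use the mode to pick camera or illumination settings for each environment.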

Researchers can also use the ambient light data to learn how people’s eyes move in different lighting. That knowledge could guide the development of augmented reality displays that automatically dim or brighten. It could also lead to ways of reducing eye strain.

Flexible Frame and Multiple Sizes

Aria Gen 2 weighs around 75 grams. Meta added folding arms to the frame for the first time. Now the glasses can fold up and fit into a small carrying case. This design makes them easier for researchers to transport from the lab to the field.

Meta offers Aria Gen 2 in eight different sizes to fit a wide range of head shapes. Each pair of folding arms has a small mechanism that clicks into place at the temples. Research teams can choose the best size for each volunteer. Those details matter when the eye tracker and cameras need to align correctly with the wearer’s eyes. A pair that is too loose or too tight could shift during use and ruin the data.

What’s Next

Meta says these new glasses are already in the hands of researchers at government labs and academic institutions. Applications will open later this year for more research teams to request Aria Gen 2 units. Meta plans to ship them to universities, medical centers, robotics labs, and other research groups.

Researchers will use Aria Gen 2 to explore AI, robot control, virtual training, and new user-interface techniques. Their work could lead to future consumer products that build on these core sensors. Meta already sells Ray-Ban smart glasses that let wearers take calls, listen to music, and capture photos. In the future, those glasses might gain eye tracking or heart-rate sensing. Meta also plans to release a consumer model called Hypernova with a built-in display. It may gain Aria Gen 2’s advanced sensors in a slim design.

By learning from Aria Gen 2, Meta hopes to refine the hardware and software needed for the next generation of augmented reality glasses. These new glasses could one day let you walk down the street while seeing directions overlaid on the real world. They might help surgeons consult patient data in real time during an operation. They could support factory workers by showing 3D assembly instructions floating just above the workbench. Every improvement in eye tracking, hand sensing, and biometric data helps make those visions a reality.

Meta has made it clear that Aria Gen 2 is not a consumer product. It is a research tool designed to accelerate innovation. Still, it offers a clear glimpse of where augmented reality and artificial intelligence are headed. By combining precise eye tracking, detailed hand tracking, and subtle health-monitoring signals, the glasses give a level of insight that was impossible just a few years ago. Researchers all over the world will now use those insights to invent new ways for humans and machines to work together.