Microsoft offered OpenAI's AI technology to the US military, in violation of OpenAI's principles, as reported by The Intercept. The offer proposed using generative AI in military operations, prompting concerns about its ethical implications and potential for harm.

As reported by The Intercept, Microsoft plans to profit from its investment in OpenAI by offering OpenAI's technology to the US military. The company gave a presentation to the Department of Defense demonstrating how generative AI could benefit military work.

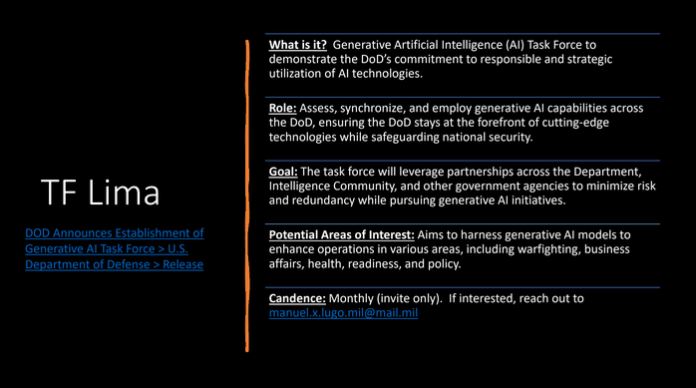

Presentation to the Department of Defense

During the presentation, Microsoft showcased concrete use cases for OpenAI's technology in security applications, including analyzing surveillance images and videos and identifying patterns in databases. It highlighted specific tools such as DALL-E and ChatGPT as potential assets for military use.

“Generative AI can be used to automatically generate written or spoken language, such as news articles, speeches, or data analysis summaries,” Microsoft stated in the presentation.

Uses of Generative AI

Generative AI is a versatile tool with applications in many domains. In the military context, Microsoft proposed using it to transform several aspects of operations. One key application discussed was automating writing tasks, including the creation of reports, forms, news articles, speeches, and data analysis summaries.

The technology was also envisioned as a way to generate images for battle management systems. For instance, Microsoft proposed using DALL-E, OpenAI's image-generation model, to create visual content that could support decision-making in military operations.

Tools like ChatGPT were suggested to streamline communication and document creation. Overall, Microsoft pitched generative AI as a way to optimize a range of military functions, from information analysis to strategic planning.

Authorization Issue

Microsoft did not seek authorization from OpenAI before offering OpenAI's technology to the US Department of Defense. Liz Bourgeois, a spokesperson for OpenAI, stated that the company's policies prohibit using its tools to develop or use weapons, injure others, or destroy property.

“OpenAI does not have partnerships with defense agencies to use our API or ChatGPT for such purposes,” Bourgeois said.

Microsoft confirmed that it made the presentation but clarified that the Department of Defense has not begun using DALL-E. The company said it had shown only possible use cases for generative AI.

Microsoft’s History of Commercializing Technology for Warfare

Microsoft has a history of commercializing technology for military use, including multiple contracts with the US military. The company has promoted its Azure platform for defense tasks, stating:

“Azure enables tactical teams to achieve greater visibility, move faster and make more informed decisions.”

Internal Conflicts at Microsoft

Internal conflicts at Microsoft arose in 2019, when employees demanded an end to a Department of Defense contract involving the use of HoloLens in IVAS (Integrated Visual Augmentation System), an augmented reality system intended to make soldiers more effective at killing enemies. The employees objected to being made to develop weapons and demanded control over how their work is used, stating:

“We were not hired to develop weapons, and we demand to be able to decide how our work is used.”

Expert Opinions

Brianna Rosen, a professor at the Blavatnik School of Government at the University of Oxford, highlighted concerns about the potential indirect harm caused by building battle management systems. She said,

“It is not possible to build a battle management system in a way that does not contribute, at least indirectly, to civilian harm.”

Heidy Khlaaf, a machine learning security engineer, questioned DALL-E's ability to generate accurate images, raising doubts about its suitability for military use. She said:

“They can’t even accurately generate a correct number of limbs or fingers; how can we trust them to be accurate with respect to a realistic field presence?”