Google has just announced a major update to Gemini 1.5 Flash. Unveiled at Google I/O, the original model marked a milestone in the company’s AI ecosystem and became one of the best small language models on the market. Now, after gathering feedback from developers and drawing on the work of Google DeepMind, Google has launched Gemini 1.5 Flash-8B.

Flash models, like comparable lightweight models from other vendors, are designed for simple, high-volume tasks. Such tasks do not require much model capability, but they involve a large amount of work that has to be handled efficiently in terms of energy and cost. With Gemini 1.5 Flash-8B, these advantages have become even more appealing.

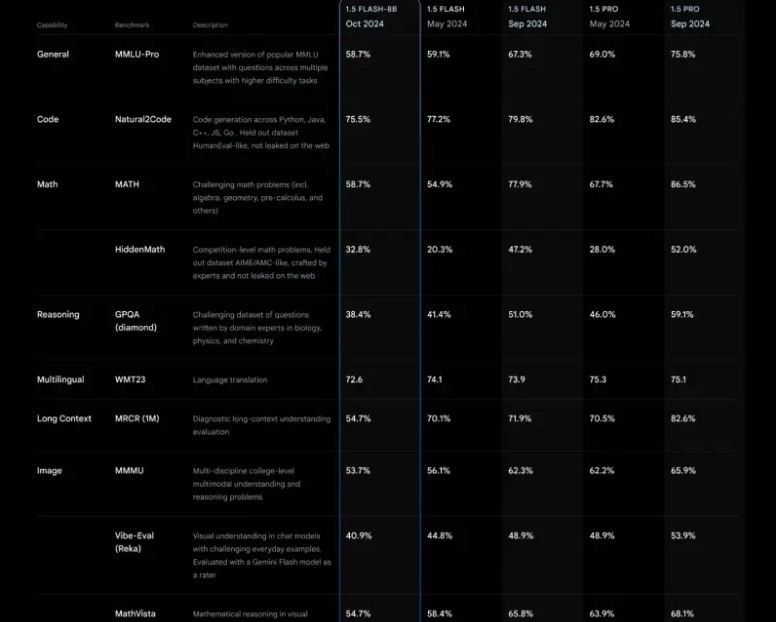

Not only is it much cheaper and more efficient, but Gemini 1.5 Flash-8B also has higher rate limits and greater speed. It outperforms the previous version in many popular LLM benchmarks. All of this comes from a smaller model than before, which shows how well Google has optimized it.

Gemini 1.5 Flash-8B is now ready for use.

The best part is that Gemini 1.5 Flash-8B is already available. Google has made it accessible to everyone in Google AI Studio and through the Gemini API. This is not a beta or test version; it’s the final, stable model that you can start using right away. For many workloads, it is likely a better investment than the regular 1.5 Flash.
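
As a rough illustration of what calling the model through the Gemini API could look like, here is a minimal Python sketch that uses only the standard library. The endpoint path, the model ID `gemini-1.5-flash-8b`, and the response layout are assumptions based on the public REST interface rather than details from the announcement, and `build_request` and `generate` are hypothetical helper names.

```python
import json
import urllib.request

# Assumed values: REST root and model ID, not taken from the announcement.
API_ROOT = "https://generativelanguage.googleapis.com/v1beta"
MODEL_ID = "gemini-1.5-flash-8b"


def build_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a generateContent request for one text prompt."""
    url = f"{API_ROOT}/models/{MODEL_ID}:generateContent"
    body = {"contents": [{"parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "x-goog-api-key": api_key,
        },
        method="POST",
    )


def generate(prompt: str, api_key: str) -> str:
    """Send the request and return the text of the first candidate."""
    with urllib.request.urlopen(build_request(prompt, api_key)) as resp:
        payload = json.load(resp)
    # Assumed response shape: candidates -> content -> parts -> text.
    return payload["candidates"][0]["content"]["parts"][0]["text"]
```

With an API key created in Google AI Studio, `generate("Summarize this support ticket: ...", api_key)` would return the model's reply as a string. Error handling and retries are left out to keep the sketch short.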

To convince you further, Google has confirmed that this is its lightest and best-optimized language model, as well as one of the most advanced in the ‘mini’ segment of the industry. It is also Google’s cheapest option and performs well in many scenarios. Thanks to its optimization, it outperforms other, more powerful and more expensive models in several key areas.

Gemini 1.5 Flash-8B is a strong performer in chat and conversational AI, content transcription, and translation over long, complex contexts. That makes it well suited to repetitive, high-volume tasks, such as powering a technical support chatbot or adding AI assistance to a call center.

In fact, the key factor is not just its optimization or size, but its price. Gemini 1.5 Flash-8B is Google’s most affordable model and one of the cheapest on the market today. Its rate limit has also doubled, from 2,000 to 4,000 requests per minute.

The pricing details are as follows:

- $0.0375 per million input tokens (prompts under 128K tokens)

- $0.15 per million output tokens (prompts under 128K tokens)

- $0.01 per million tokens for cached prompts (prompts under 128K tokens)
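
To make these rates concrete, the following Python sketch estimates the cost of a workload from its token counts. The helper `estimate_cost` is hypothetical, and it makes two simplifying assumptions: every prompt stays under the 128K-token threshold, and cached tokens are counted separately from regular input tokens, with no cache storage fees.

```python
from decimal import Decimal

# USD per one million tokens, as listed above (prompts under 128K tokens).
PRICE_PER_MILLION = {
    "input": Decimal("0.0375"),
    "output": Decimal("0.15"),
    "cached": Decimal("0.01"),
}
MILLION = Decimal(1_000_000)


def estimate_cost(input_tokens: int, output_tokens: int, cached_tokens: int = 0) -> Decimal:
    """Estimate the USD cost of a workload (hypothetical helper).

    Assumes cached tokens are billed only at the cached rate and ignores
    any cache storage charges.
    """
    if min(input_tokens, output_tokens, cached_tokens) < 0:
        raise ValueError("token counts must be non-negative")
    total = (
        PRICE_PER_MILLION["input"] * input_tokens
        + PRICE_PER_MILLION["output"] * output_tokens
        + PRICE_PER_MILLION["cached"] * cached_tokens
    )
    return total / MILLION


# Example: 10,000 support chats averaging 1,000 input and 200 output tokens.
chats = 10_000
cost = estimate_cost(input_tokens=chats * 1_000, output_tokens=chats * 200)
print(f"Estimated cost: ${cost:.4f}")
```

In this example, the 10 million input tokens cost $0.375 and the 2 million output tokens cost $0.30, for a total of $0.675.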