Instagram will soon apply stricter safety settings to accounts run by adults that mainly share content featuring children. The changes are meant to block unwanted incoming messages and to filter toxic comments on pictures and videos of children. The updates go live this week in the United States and will roll out to the rest of the world over the coming months.

Safer messaging for child‑focused accounts

Accounts managed by parents, talent agents, or other adults that regularly post pictures of children will automatically be placed into Instagram’s most restrictive messaging settings. This means that only approved followers can send them direct messages. In addition, the Hidden Words filter hides offensive words, phrases, and emojis in comments and message requests. Instagram will show a notice at the top of each affected account’s feed explaining the new settings, and account owners will be prompted to review their privacy settings.

Blocking suspicious adult interactions

To further protect young subjects, Instagram will stop suggesting these accounts to adults who have already been blocked by teens. The app will also make it harder for those adults to find child‑focused accounts through search. This step seeks to cut off avenues for abusers to discover and target children’s content on the platform.

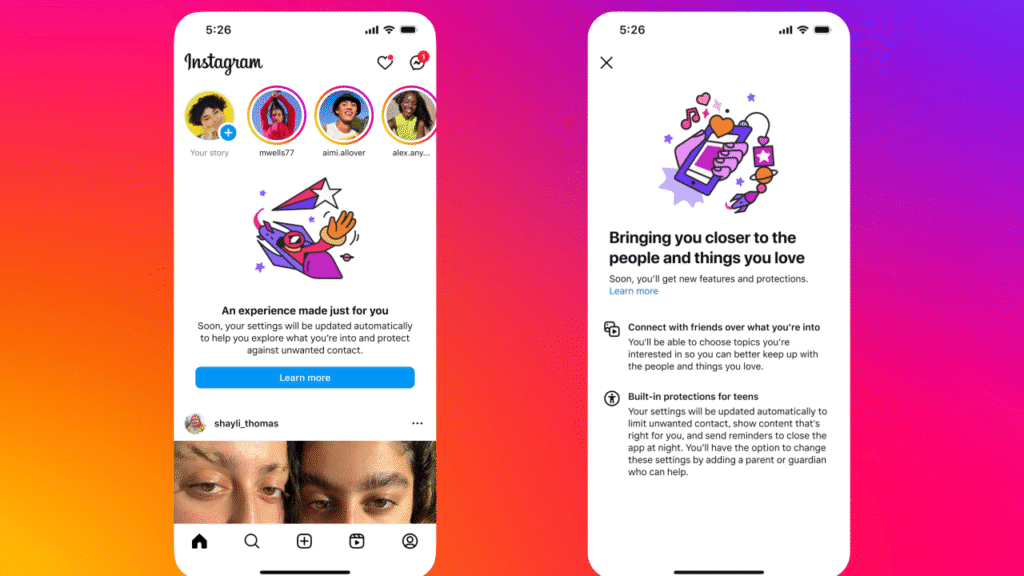

New features for teen accounts

Instagram is adding safety tools to the Teen Accounts experience, which already applies default protections for users under eighteen. When a teen begins a new chat, they will see the month and year their chat partner joined Instagram. This context helps teens spot fake or malicious profiles. The app will also offer combined block and report options in direct messages and display reminders about safety best practices, such as checking profiles carefully and thinking twice before sharing personal content.

Expanded nudity protection filter

Instagram has maintained a blur option for sensitive images in direct messages. The company reports that ninety‑nine percent of users, including teens, keep this filter active. More than forty percent of the images blurred by default in June were left blurred by their recipients, indicating that users value the extra layer of privacy.

Instagram’s latest steps come as experts and policymakers raise concerns about children’s safety and mental health online. In some states, laws now require parental consent for minors to use social apps. Instagram’s new measures for child‑focused and teen accounts demonstrate the company’s effort to balance creative expression with strong protections for its youngest community members.

The company has also removed over one hundred and thirty‑five thousand accounts that attempted to sexualize children and another five hundred thousand associated accounts. These actions and new settings underscore Instagram’s commitment to keeping the platform safer for children and teens alike.