What Is Character AI?

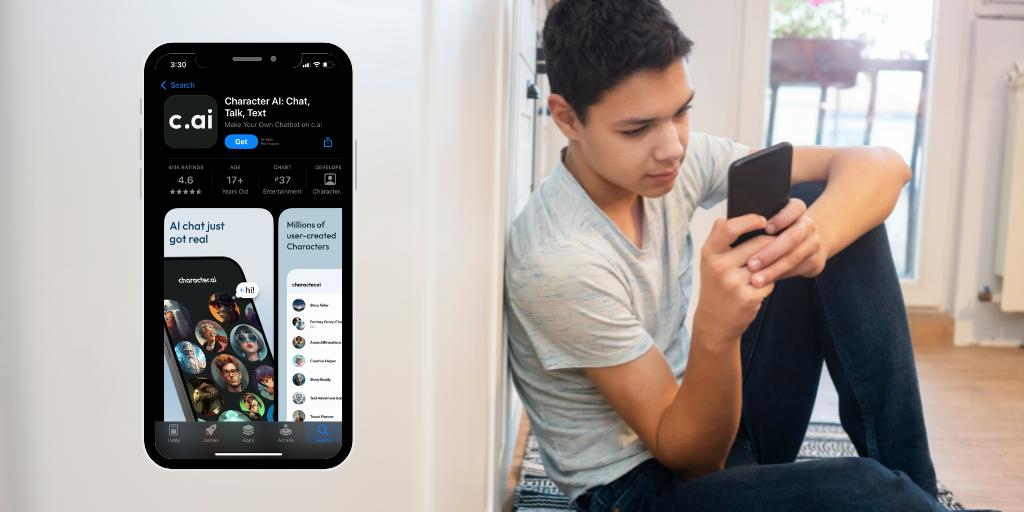

Character AI is a chat platform where users can talk to virtual characters that act and speak like humans. Launched in late 2022, Character AI allows anyone to create a character with a unique personality and voice. A user can share that character with the online community or keep it private. Characters can be based on real people, famous figures, book or movie heroes, or entirely new creations.

The service uses an artificial intelligence model to produce lifelike responses. When a user types a message, Character AI processes that text and generates a reply that matches the character’s defined personality. Users can also add a voice file so the character’s responses are spoken aloud. The result is a conversation that can feel very personal and real.

Why People Use Character AI

Many users find Character AI entertaining and engaging. Fans of historical figures can talk to a virtual version of their favorite thinker or leader. Writers use it to brainstorm ideas by chatting with characters who might offer fresh perspectives. Social media users enjoy creating role-play scenarios with fictional characters or exploring fan fiction concepts.

For teens and young adults, the appeal often comes from a feeling of friendship. A user who feels shy or anxious might find it easier to express thoughts with a supportive virtual character. Some chatbots even adopt a counseling style, encouraging users to share personal worries. While the platform stresses that chatbots are not a substitute for professional help, some users say that talking to a sympathetic character helped them feel less alone.

Safety Concerns and Controversies

Character AI has faced serious incidents that raise safety questions. In October 2024, a lawsuit drew wide attention to the death by suicide of a teenager who had discussed self-harm with a Character AI chatbot. The conversations reportedly included exchanges about plans to end their life. That tragic event led to criticism that the platform had failed to block harmful content.

In the same month, a news investigation found characters impersonating real victims of school violence and a social media influencer who died by suicide. Those characters appeared without permission and allowed users to discuss sensitive details as if they were speaking to the real person. Character AI removed those characters after the investigation and apologized.

Previously, some chatbots gave poor advice on important topics or displayed biased comments about race and gender. Some characters became known for harsh or hurtful responses. That raised concerns that children and teens might be exposed to negative and inappropriate material.

Risks for Teens and Kids

Inappropriate and Harmful Content

Even with filters in place, some characters still share explicit or unsettling content. Teenagers might encounter sexual or violent dialogue that can be disturbing. Some characters simulate dangerous scenarios involving weapons or self-harm. These experiences can leave young users feeling upset or confused.

Emotional Attachment and Isolation

A chatbot that offers constant encouragement can lead a user to form a strong emotional bond with it. Over time, some people may come to depend too much on AI for guidance in their emotional lives. A lonely or anxious teen might spend hours chatting online instead of talking to a parent, friend, or counselor who could help. Relying too heavily on a virtual friend can crowd out the benefits of connecting with people face-to-face.

Sharing Personal Information

Children sometimes treat chatbots as real friends and share private details. Although Character AI does not publicly display private conversations, these exchanges are not end-to-end encrypted the way some secure messaging apps are, so company staff could in principle access chat logs. A character might ask a user for personal data, and a young user may not realize the risk.

Misinformation and Confusion

Character AI generates responses based on patterns in the text data its model was trained on, much of it drawn from the internet. Sometimes a character may give incorrect or misleading information. A teenager might believe false statements about health or legal matters if a character sounds confident. That can lead to confusion and poor decisions.

How Character AI Tries to Improve Safety

Character AI introduced special features for users under eighteen. When a minor signs in, they see a warning that characters are not real people. The system uses modified filters to block some content and tries to prevent characters from discussing self-harm or other dangerous topics. After a user has chatted for an hour, a notification appears reminding them to take a break.

The platform has also moved to remove characters that violate its community guidelines more quickly. If users report a character for inappropriate content, that character may be taken down and its chat history made unavailable. Character AI encourages users to report any problematic behavior to help protect the community.

Keeping Teens Safe with Character AI

Parents should talk openly with their children about how chatbots work and what to watch for. A simple discussion can help a teen understand that characters simulate conversation and may not always be truthful. Parents can encourage their child to share any chat that left them upset or confused.

Reviewing the privacy and reporting features together builds awareness. A parent and child can explore the settings to see how to report characters or block them. This helps a young user feel more confident in speaking up if something seems wrong.

Considering a child’s maturity level is important before allowing access. Even if a teen meets the platform’s minimum age of thirteen, they may not yet have the critical reading and thinking skills to handle harmful content. If parents are uneasy, they can use device or internet filters to block the app entirely.

Encouraging real-world friendships and activities helps balance online interactions. A teen who spends time with friends in person is less likely to become overly reliant on virtual characters. Setting time limits helps ensure that Character AI does not replace study or family time.

Finally, discussing healthy ways to use the platform helps prepare a teen to stay safe. Parents can suggest using Character AI for practicing languages or creative writing rather than as a substitute for real friendships. Agreeing on a family rule that personal details and photos stay private can reduce the risk of identity misuse. Character AI can offer fun and creative experiences, but parents must stay involved. By setting clear boundaries and talking regularly about any concerns, families can make the platform a safer place for teens.