Building AI chatbots, social feeds, and advertising features may not have to depend on Nvidia’s premium hardware. Meta is quietly developing its first AI training chip, built on the open-source RISC-V architecture. If it works, the chip could disrupt how the AI industry sources its hardware while saving Meta a substantial amount of money. Here is the inside story.

Why Meta’s Bet on RISC-V Matters

For years, Nvidia’s GPUs have been the gold standard for training AI models. But their sky-high costs and limited supply have left tech giants like Meta desperate for alternatives. Enter RISC-V, an open-source instruction set architecture that lets companies design processors without paying licensing fees. Meta’s new chip isn’t just about cutting costs; it is about control.

Building its accelerator on RISC-V lets Meta tailor each part of the chip to its own workloads. Think of it like building a race car from scratch instead of modifying a factory model. Need faster memory for AI training? Add it. Want to tweak how data flows? No problem.

How the Chip Works

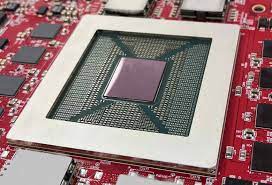

Meta’s chip, developed with Broadcom and TSMC, is built for one job: training AI models. Training requires crunching mountains of data, so the chip likely uses a grid-like design (imagine a city grid where each block processes data) paired with ultra-fast memory. This setup lets it handle complex tasks, like teaching AI to write human-like text or recommend Instagram posts, more efficiently.
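
To make the city-grid analogy concrete, here is a toy sketch in Python. It is purely illustrative: Meta has not published the chip's design, so this assumes nothing about the real hardware. The function name `tiled_matmul` and its `tile` parameter are hypothetical. The idea is that a large matrix multiplication, the core operation in AI training, can be split into small blocks, with each block of the output handled like one "city block" of the grid.

```python
def tiled_matmul(a, b, tile=2):
    """Multiply a (n x k) by b (k x m), one tile of the output at a time.

    Illustrative only: each (i0, j0) output tile stands in for one
    processing block in a hypothetical grid-style accelerator.
    """
    n, k, m = len(a), len(b), len(b[0])
    out = [[0.0] * m for _ in range(n)]
    for i0 in range(0, n, tile):          # row of the grid
        for j0 in range(0, m, tile):      # column of the grid
            for p0 in range(0, k, tile):  # stream input tiles through the block
                for i in range(i0, min(i0 + tile, n)):
                    for j in range(j0, min(j0 + tile, m)):
                        acc = 0.0
                        for p in range(p0, min(p0 + tile, k)):
                            acc += a[i][p] * b[p][j]
                        out[i][j] += acc  # accumulate partial sums per tile
    return out


if __name__ == "__main__":
    a = [[1, 2, 3], [4, 5, 6]]
    b = [[7, 8], [9, 10], [11, 12]]
    print(tiled_matmul(a, b))
```

On real hardware the blocks would run in parallel and keep their tiles in fast local memory, which is where the efficiency would come from; this loop simply visits them one after another.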

Early prototypes are already being tested in Meta’s data centers. Engineers are checking if the chip can match Nvidia’s performance while using less power, a critical factor for a company running billions of AI tasks daily.

The Stakes: Why Meta Can’t Afford to Fail

This isn’t Meta’s first rodeo. In 2022, the company scrapped an AI chip project after it underperformed, forcing a return to Nvidia. But this time, the stakes are higher.

Meta’s AI ambitions are exploding. Its Llama language models power everything from chatbots to content moderation, and CEO Mark Zuckerberg has vowed to create “the most popular and advanced AI products.” Relying solely on Nvidia’s GPUs is risky: supply shortages or price hikes could derail progress.

A working RISC-V chip would give Meta a path to independence from outside suppliers. With its own silicon, Meta could train models faster, operate on its own terms, and work around performance bottlenecks. But if the chip flops? Billions in R&D go down the drain, and Meta ends up relying on Nvidia even more.

What This Means for the Rest of Us

Meta’s gamble could reshape the chip market. If RISC-V proves itself in AI training, Google and Amazon could be motivated to copy the strategy, eventually loosening Nvidia’s grip on the market. Open-source chip designs could also let developers build cheaper products, fueling further innovation.

But there’s a catch. Building specialized hardware demands significant funding and specialized engineering expertise. Meta’s vast resources let it outpace smaller businesses, which could widen the gap between big tech giants and startups.

The Big Question: Will It Work?

Engineers at Meta are staying quiet about their progress, but employees suggest they are optimistic. Initial testing uses real workloads: training smaller AI models that support ad targeting. According to Reuters, if the pilot tests succeed, Meta intends to scale up production of its custom chips and use them for programs such as Llama 4. Nvidia isn’t sweating yet.

Its GPUs still dominate, and its proprietary software ecosystem, CUDA, remains unmatched. Still, Meta’s move signals an emerging trend: the race for AI supremacy extends beyond algorithms, and hardware is a vital part of it.