The next major focus for tech companies is artificial intelligence models with reasoning abilities. OpenAI took the lead with o1, and now Google has responded with a reasoning version of Gemini 2.0.

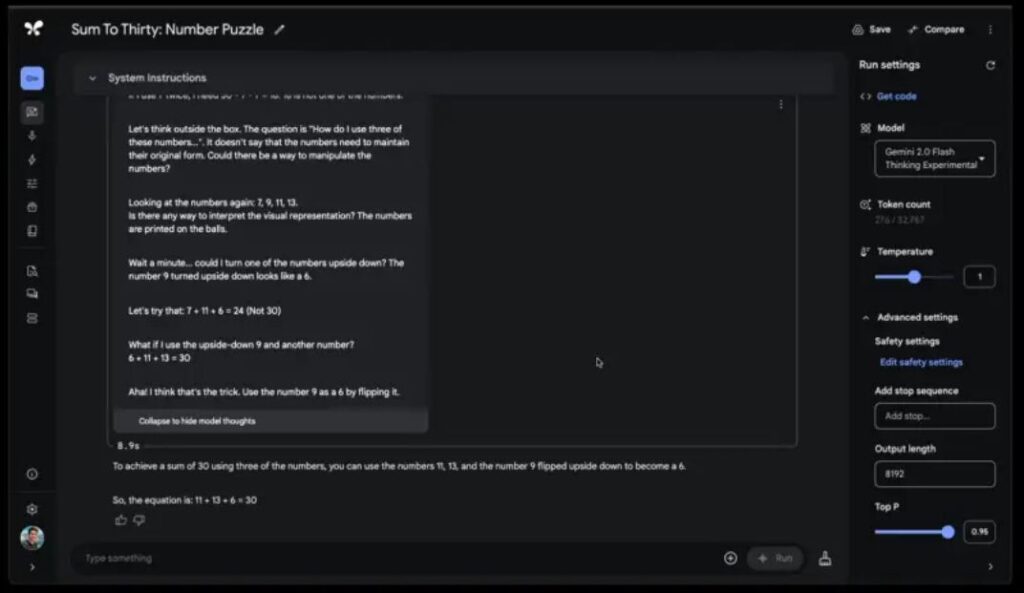

The new model, called Gemini 2.0 Flash Thinking Experimental, is, as the name suggests, still in its experimental phase. It is nevertheless available to test for free through AI Studio.

Traditional AI models generate a response directly by predicting the most likely continuation of the prompt. Models with reasoning capabilities instead work through intermediate steps, in effect “thinking” through a problem before they commit to an answer. This approach aims to make their responses as accurate as possible.

According to Logan Kilpatrick, who leads AI Studio, Gemini 2.0 Flash Thinking Experimental can create plans and solve complex problems independently, drawing on visual cues such as images. In a brief demo shared by Kilpatrick on X (formerly Twitter), the AI is shown solving a query and displaying the different approaches it analyzed before reaching the final answer.

Since this is still an experimental model, its performance is not yet perfect. However, those interested in trying it out can do so for free through AI Studio or the Gemini API.
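For readers who want to try the API route, here is a minimal sketch of a single request to the Gemini API's `generateContent` REST endpoint using only the Python standard library. The model ID `gemini-2.0-flash-thinking-exp` is an assumption (experimental model names change often, so check the current list in AI Studio), as are the `GEMINI_API_KEY` environment variable and the helper names `build_request` and `ask`.

```python
import json
import os
import urllib.request

API_ROOT = "https://generativelanguage.googleapis.com/v1beta"
# Assumption: experimental model IDs are renamed or retired frequently.
MODEL_ID = "gemini-2.0-flash-thinking-exp"


def build_request(prompt: str, api_key: str, model_id: str = MODEL_ID) -> urllib.request.Request:
    """Build (but do not send) a generateContent request for a text prompt."""
    url = f"{API_ROOT}/models/{model_id}:generateContent"
    body = json.dumps({"contents": [{"parts": [{"text": prompt}]}]}).encode("utf-8")
    headers = {"Content-Type": "application/json", "x-goog-api-key": api_key}
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


def ask(prompt: str, api_key: str) -> str:
    """Send the prompt and join the text parts of the first candidate."""
    with urllib.request.urlopen(build_request(prompt, api_key), timeout=60) as resp:
        data = json.load(resp)
    parts = data["candidates"][0]["content"]["parts"]
    return "\n".join(part.get("text", "") for part in parts)


if __name__ == "__main__":
    key = os.environ.get("GEMINI_API_KEY")  # hypothetical variable name
    if key:
        print(ask("How many prime numbers are there below 50? Explain briefly.", key))
```

The request is built separately from the network call so the payload can be inspected without an API key. Whether the response exposes the model's intermediate thoughts depends on the model version, so the sketch simply joins whatever text parts come back.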

Gemini 2.0 now includes a version with reasoning capabilities.

Since OpenAI announced o1 in September, interest in AI models with reasoning capabilities has surged. Reports about Google’s work in this area surfaced in early October, revealing that the company was developing a technology similar to OpenAI’s. At the time, it was reported that Google was using a technique known as “chain-of-thought prompting.”

Jeff Dean, chief scientist at Google DeepMind, shared on X (formerly Twitter) that the new version of Gemini 2.0 is notable for “explicitly showing” its thought process. It also features a timer that indicates how long the model takes to reach a solution. Dean explained that the model builds on the speed and performance of 2.0 Flash and is trained to use its thoughts to strengthen its reasoning.

Big tech companies hope these models will eliminate hallucinations or, at the very least, significantly reduce them. AIs that rely purely on predictive methods are more likely to generate incorrect answers, especially on topics with limited data in their training sets.

Artificial intelligence models with reasoning capabilities often take longer to provide answers because they go through additional steps to “think” through their responses and compare different possibilities. However, this process is designed to improve accuracy. Despite these advantages, there are concerns about whether this technology is scalable, given its higher costs and greater demand for computing power.
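The trade-off between accuracy and cost can be illustrated with a toy sketch. It uses majority voting over several sampled answers (often called self-consistency), a generic technique chosen here for illustration and not a description of how Gemini or o1 work internally. The function `majority_answer` and the canned answers are hypothetical; the point is only that each extra candidate compared costs one more model call.

```python
from collections import Counter
from typing import Callable, Tuple


def majority_answer(sample_answer: Callable[[], str], n_samples: int) -> Tuple[str, int]:
    """Draw n_samples candidate answers; return the most common one and the calls used."""
    if n_samples < 1:
        raise ValueError("n_samples must be at least 1")
    answers = [sample_answer() for _ in range(n_samples)]
    winner, _votes = Counter(answers).most_common(1)[0]
    return winner, len(answers)


# Hypothetical stand-in for a model: it replays canned answers, two of them wrong.
canned = iter(["12", "15", "12", "12", "9"])
answer, calls = majority_answer(lambda: next(canned), n_samples=5)
print(answer, calls)  # prints: 12 5
```

A single sample could have returned "15" or "9". Voting over five samples recovers "12", but at five times the compute, which is the scalability concern raised above.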

It remains to be seen how this version of Gemini 2.0 develops and whether it can compete with o1, which now has an upgraded version integrated into ChatGPT Pro.