A robot that can fold laundry, pack a lunchbox, and open a bottle without task-specific training is no longer hypothetical. Google DeepMind is pursuing this vision with its latest AI models for robotics: Gemini Robotics and its companion model, Gemini Robotics-ER ("embodied reasoning").

The technology enables robots to understand their surroundings and manipulate objects with human-like precision. This could move both industries and households toward smarter, more flexible automation in their daily operations.

How Gemini Robotics Works

Gemini Robotics is built on Google's Gemini 2.0 model and combines vision, language, and action control so that robots can operate in unfamiliar environments. Conventional robots can perform only the tasks they were programmed for, but Gemini Robotics can generalize: a robot that has learned to grasp a cup can apply that knowledge to handling a bottle it has never seen before.

Key Innovations

- Generality: Adapts to unfamiliar tasks using existing knowledge.

- Interactivity: Responds to environmental changes and human instructions.

- Dexterity: Performs precise actions like folding paper or unscrewing caps.

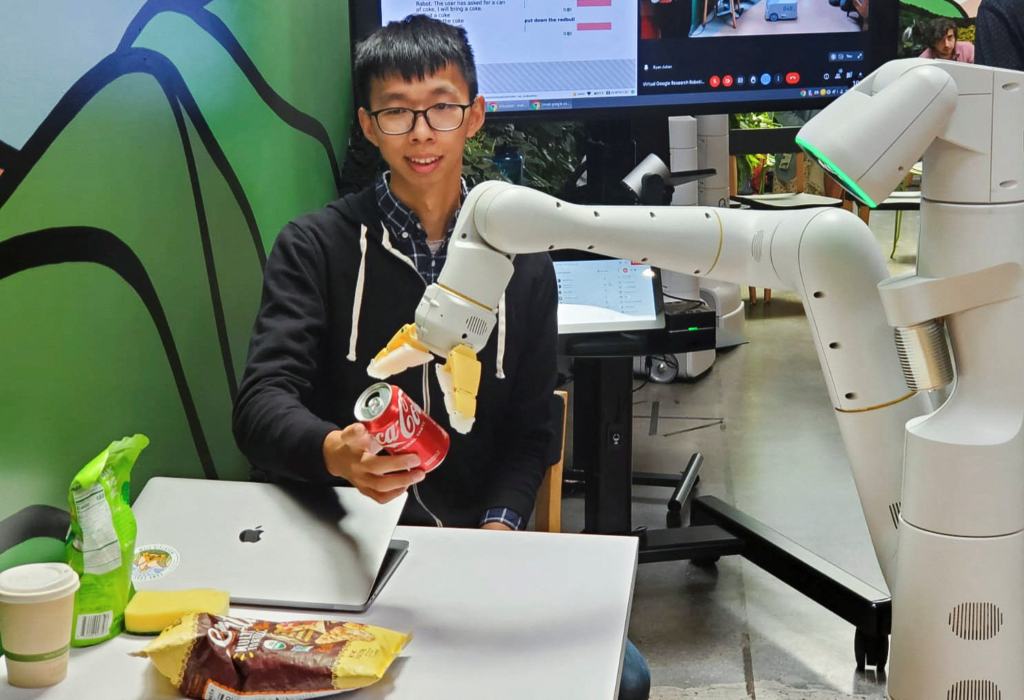

During a demonstration, Google showed a robot neatly folding a sheet of paper, a task that requires fine coordination and spatial awareness. This combination of dexterity and adaptability is critical for robots working in dynamic settings such as homes or hospitals.

Gemini Robotics-ER: Advanced Reasoning for Complex Tasks

Gemini Robotics-ER is a second model, designed for complex tasks that require substantial planning. To pack a lunchbox, for example, a robot must recognize the items, open containers, and place the contents correctly without mishaps. Gemini Robotics-ER analyzes its environment to work out a logical sequence of actions.

Google is working with robotics companies such as Boston Dynamics and Agility Robotics to apply these models to humanoid robots. Reports indicate that the resulting efficiency gains help robots carry out warehouse tasks and deliver better results on assembly lines.

Safety First: Google’s Approach to Responsible Robotics

Google has built safety protocols, such as speed limits, into the system to prevent accidents. Before acting, Gemini Robotics-ER performs a risk assessment and stops if a human enters its operating area. The company has also released benchmarks for evaluating robot safety and draws on its "Robot Constitution," a set of rules inspired by Isaac Asimov's Three Laws of Robotics that require robots to put human safety first. Researchers emphasize rigorous testing. For example, robots are instructed not to pick up sharp objects unless they are explicitly directed to do so in an appropriate setting. These safeguards are meant to build trust between humans and robots as robots become a larger part of daily life.

Real-World Applications and Partnerships

Google's AI technology is already being put to use. Apptronik is working with Gemini Robotics to develop humanoid robots for logistics, and Enchanted Tools is building robots that assist healthcare professionals, especially nurses. Early results are promising: the robots make fewer errors on repetitive tasks and adjust their behavior when they encounter unexpected obstacles.

At home, robots could tidy up household items and help elderly residents with domestic chores. In factories, they could assemble electronic components and carry out automated inspections without downtime.

The Future of Robotics with Google’s AI

Google DeepMind's work shows that robots are beginning to perform tasks that were previously beyond the reach of computers. Gemini Robotics pairs artificial intelligence with physical robot control, bridging digital intelligence and real-world action.

As testing continues, robots are likely to move into everyday use, from manufacturing plants to personal care facilities. And just as a software update can add new features to your smartphone, robots may one day gain new skills through software upgrades.